AMP of AMPs

Overview

AMP can be used to deploy and manage other AMP instances. This can be used to give a scalable and secure architecture.

- Load can be sharded across AMP instances, with each AMP instance managing a separate set of applications.

- AMP can run a different (set of) AMP instances per tenant to provide isolation. The provisioning requests can be routed to the correct instance by a master AMP, based on the tenant id.

- AMP instances can be collocated within an isolated network, to manage the applications within that network.

- AMP can be clustered for high availability - a standby node takes over automatically if the primary fails.

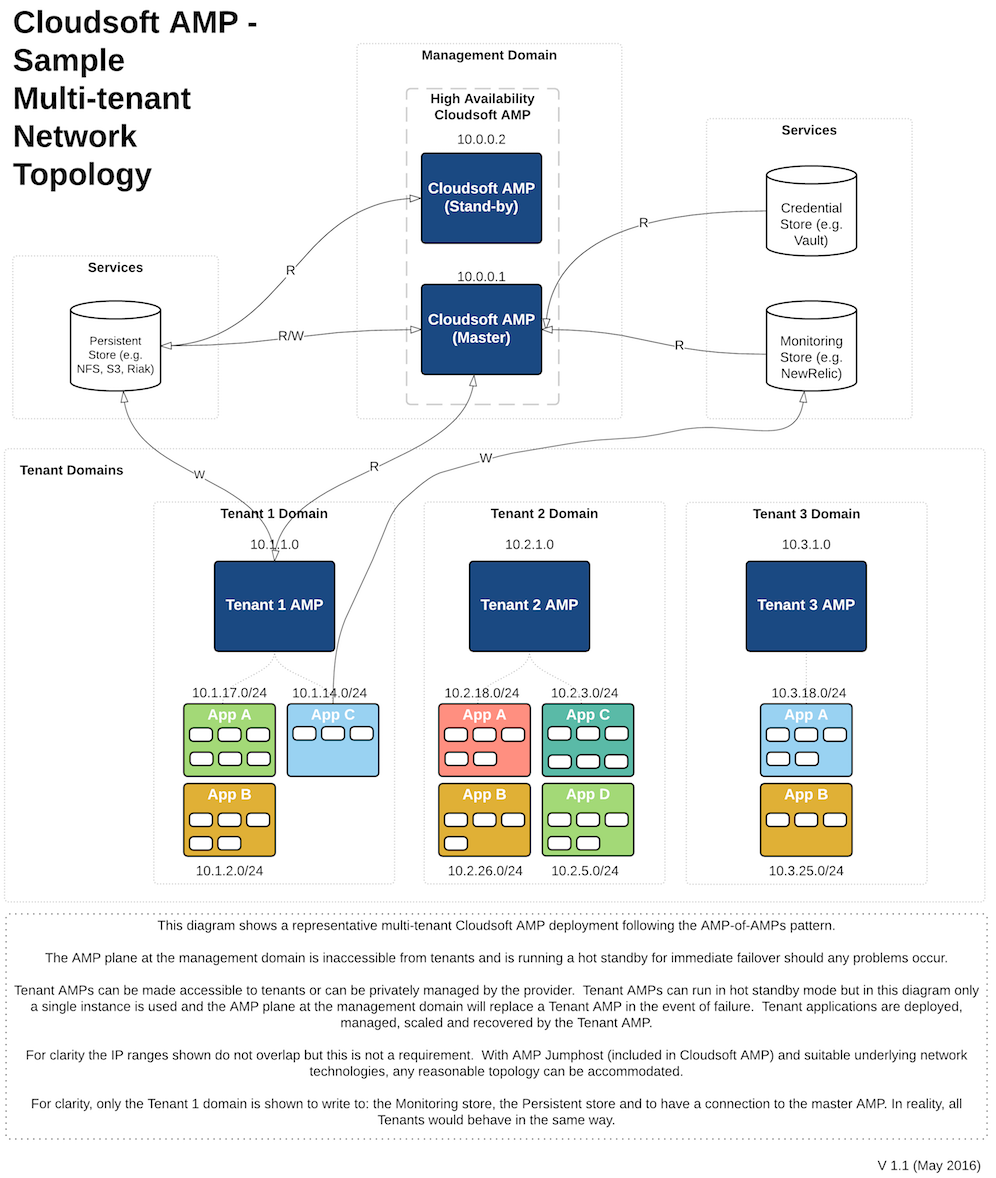

The diagram above shows a representative multi-tenant Cloudsoft AMP deployment following the AMP-of-AMPs pattern.

The remainder of this chapter focuses on the use-case of multiple tenants where each tenant is a “customer”, but it could also be a line-of-business, a site with transient connectivity, or a collection of one or more deployments which requires secure isolated management.

Note that for many use cases, AMP Cluster may be preferable to the AMP of AMPs model. AMP Cluster provides more out-of-the-box management support, so is simpler, but is not as flexible; for some use cases, such as secure isolated and transient tenants, it may be useful to manually build an AMP of AMPs where required.

1. Multi-tenant service overview

It is increasingly common for a service provider to offer to their enterprise customers a set of service blueprints. These could be single-VM applications or multi-VM applications that the customer can order and that are automatically deployed. Some of these applications may also be managed automatically (i.e. offered as a dedicated SaaS, rather than allowing direct access to the underlying VMs).

The customer’s applications (and thus their VMs and services) are in an isolated network. Each tenant will have an isolated network in each cloud + region that they use. The VMs running in these isolated networks will be private by default (i.e. not reachable from outside that isolated network), with options for using NAT rules, security groups, and cloud load-balancers to expose particular ports / services.

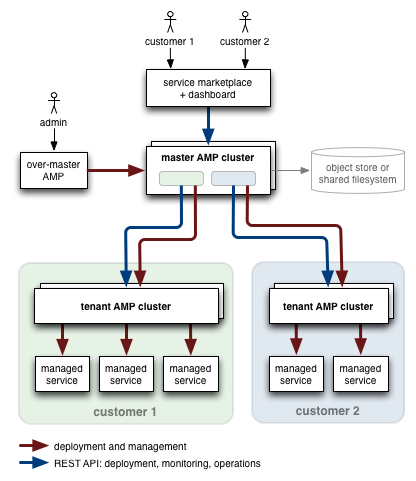

The points where end-users interact with their blueprints are:

- An application marketplace where instances of blueprints are requested and tracked

- A “controller” dashboard, which could be specific to the blueprint type. One approach is to have a custom webapp specific to the blueprint, which shows the health, metrics and operations for that blueprint.

- The system under management by the blueprint (i.e. the deployed application, such as a Cassandra cluster or a LAMP stack)

The customer never directly interacts with the management plane. Usage of the AMP web console is often limited to second-line support by the service provider. The AMP REST API is used to interact with AMP, and to invoke AMP operations from the customer dashboard or from ticketing systems etc.

2. Terminology

| Term | Definition |
|---|---|
| AMP Overmaster | AMP management plane that looks after the AMP master(s). For example, there could be separate master AMP clusters for production, staging and development. |
| AMP Master | AMP management plane that looks after the AMPs for each tenant. Provisioning requests and queries can be routed through the AMP master, which will forward them to the correct tenant AMP. |
| Tenant AMP | The AMP node(s) responsible for a given tenant in a given cloud/region. |
| AMP Cluster | An AMP management plane, which usually consists of two AMP nodes where the second is a stand-by to take over automatically if the first node fails. |
| Service provider | The company/organization offering the service to the enterprise customers. |
| Tenant | A customer of the service provider. Often an enterprise customer who may wish to run many applications in their isolated network. |
| Service blueprint | The blueprint of a service to be provisioned and optionally managed; normally represents an application which could be single-VM or a multi-VM (e.g. a Java enterprise application with JBoss app-server and MySQL database, or a MongoDB cluster). |
| Customer marketplace/dashboard | The customer-facing UI for ordering services; the customer portal. |
| Controller WAR | A webapp that is specific to a type of blueprint, used as a dashboard for instances of that blueprint. It can pick out specific attributes and operations to be exposed to the end-customer. |
| Isolated network | A network, associated with one single tenant, that isolates the VMs within it from the outside world. |

3. Management-plane overview

Each tenant’s isolated network has a set of tenant AMPs, which run within the isolated network. The tenant AMPs are responsible for deploying and managing all applications within this isolated network. The set of tenant AMPs can be grown (and shrunk) as the load changes for this customer. The set of customer applications is easily sharded across the tenant AMPs, as each application is (currently) deployed and managed independently.

The master AMP cluster manages all the tenant AMPs. The master AMP is responsible for provisioning new tenant AMPs as required, and for routing traffic to them.

When a tenant orders a new application through the customer dashboard, a REST API call is made to the master AMP. This is then routed to the least-loaded tenant AMP within the appropriate isolated network.
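The "least-loaded" choice can be illustrated with a small shell sketch. It is not the master AMP's actual routing code: the input format (one `name load` pair per line) and the use of the application count as the load metric are assumptions made for the example.

```shell
#!/bin/sh
# Sketch only: pick the least-loaded tenant AMP from "name app_count" lines on stdin.
# The input format and the load metric (application count) are assumptions.
pick_least_loaded() {
    # Sort numerically by the second field (load), keep the first line, print its name.
    sort -k2,2n | head -n 1 | awk '{ print $1 }'
}

# Example with hypothetical tenant AMP names and loads:
#   printf 'tenant-amp-a 5\ntenant-amp-b 2\ntenant-amp-c 9\n' | pick_least_loaded
```

With the hypothetical input shown in the comment, the function prints `tenant-amp-b`.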

When a new tenant is being created, the master AMP creates a new isolated network for that customer. When the first application is ordered by that tenant, a new tenant AMP is created which will then handle the application provisioning.

Example Workflow

The following steps illustrate the interactions.

- A new customer orders a new application through the customer dashboard.

- The dashboard back-end requests the application be provisioned, via the REST API of the master AMP.

- No infrastructure for this tenant exists yet, so the master AMP will:

  - Create a new isolated network for the tenant

  - Create a tenant AMP in this network (creating a VM for it)

- The master AMP forwards the application provisioning request to the tenant AMP.

- The tenant AMP provisions the application within the isolated network.

- The dashboard back-end retrieves the status of the new application via the master AMP’s REST API.

- The dashboard shows the customer the application endpoint and health information.
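The decision in the middle of this workflow (create the tenant infrastructure only when none exists, then forward the request) can be sketched as a dry run. The function name and the way known tenants are passed in are hypothetical; nothing is provisioned, the steps are only printed.

```shell
#!/bin/sh
# Dry run: print the steps the master AMP would take for an order.
# $1 = tenant id, $2 = space-separated ids of tenants that already have a tenant AMP.
# Both the function name and this calling convention are hypothetical.
plan_order() {
    tenant_id=$1
    known_tenants=$2
    case " $known_tenants " in
        *" $tenant_id "*)
            ;;  # infrastructure already exists, nothing to create
        *)
            echo "create isolated network for $tenant_id"
            echo "create tenant AMP VM for $tenant_id"
            ;;
    esac
    echo "forward provisioning request to tenant AMP of $tenant_id"
}

# Example: plan_order 00002 "00001"   (a tenant with no infrastructure yet)
```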

4. Current Status

AMP-of-AMPs is in the process of being generalised, from the customer-specific project in which it was originally created.

The current code supports deploying a master AMP cluster (i.e. standby nodes for HA), and having that deploy tenant AMPs on demand. Currently a single tenant AMP is deployed per tenant.

The recommended setup for evaluations is:

- Master AMP:

  - Installed on a moderately powerful VM.

  - Configured to use persistence to a local object store (if available), or to the local file system.

  - [Optional] A second standby AMP instance (for HA). It would require access to the same persisted state.

  - No sharding of the master AMPs (e.g. different instances for different tenants).

- One or more tenants.

  - For each, the tenant AMP is deployed to its own VM.

  - Deploy to a default network (e.g. no advanced networking for vCD), or to a public cloud without using isolated networks.

  - Only local file-based persistence for the tenant AMP (currently).

  - No HA pair for the tenant AMP.

  - No sharding of the tenant AMP instances (for scalability for a given tenant).

There are a number of features that are either still being extracted from customer-specific code, still under development, or that could be added based on customer requirements:

- Over-master AMP to deploy and manage the master AMP cluster.

- For tenants with high load, deploy a set of tenant AMPs where each manages a sub-set of the tenant’s applications. Scaling back is thus not yet supported either.

- Supporting multiple versions of a given blueprint. Currently, when a new blueprint version is deployed, that will be used for all subsequent deployments. Instead, one should be able to specify the specific version of the blueprint to be used.

- Tenant AMP high availability. Currently, each tenant AMP instance does not run with a standby.

  - Very soon, there will be support for specifying the persistence setting of the tenant AMP. This will allow a replacement tenant AMP to be started (semi-manually) to replace a failed node.

  - We will support tenant AMP HA (i.e. deploying a standby along with the primary).

  - Having a small standby pool of VMs available to quickly replace a tenant AMP could be supported. The VMs in the pool would have AMP pre-installed. Replacing a failed node would simply involve automatically configuring the persistence directory (in the properties file), and starting AMP.

- Sharding the load on the master AMP would be possible, by running multiple instances that each handle a distinct subset of the tenants.

- Advanced networking configuration is not passed in when creating the tenant AMP. Therefore it may not yet be possible to create the tenant AMP in a non-default isolated network, or with custom VPN connection settings.

- Rebind of master AMP, when there are existing tenants, requires more testing and bug fixing. For example, the tenant may come up showing as “on-fire” if it cannot initially connect. However, when operations are performed it will try to reach the tenant AMP so these operations will succeed if the tenant AMP is healthy.

5. Customer Marketplace / Dashboard

A common deployment pattern is for the service provider to develop (or reuse) their own customer dashboard. This dashboard is the “marketplace” (i.e. the on-line store where one can browse from a catalog of service blueprints, and choose what should be deployed). The dashboard is also used by customers to view the list of running applications, to view their status, and to manage those applications.

6. Resilience / High Availability

AMP can be configured to persist its state to an object store, or to the file system (e.g. to an NFS mount). See the documentation. Any object store supported by the jclouds blobstore abstraction is supported.

AMP can run with one or more standby nodes. The standby nodes monitor the health of the AMP that is currently “master”. If it fails, the standby nodes elect a new master and this takes over management, reading the persisted state.

7. Tenant AMPs

Auto-scaling the Tenant AMPs

For a given tenant, there can be one or more tenant AMP instances.

The load on AMP is easily split across instances - sharded with an independent set of apps on each AMP instance. The number of AMP instances per tenant can be increased over time as required, based on the number of apps/VMs for that tenant.

The AMP master can choose the least-loaded tenant AMP instance when a new application is to be deployed.

A policy can trigger adding of tenant AMP instances automatically, based on metrics such as the number of applications or VMs under management.
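As an illustration of such a policy, the sketch below computes how many tenant AMP instances a tenant would need for a given number of applications. The per-instance threshold is a hypothetical tuning value, not an AMP setting, and the real policy may use other metrics.

```shell
#!/bin/sh
# Sketch of a scaling rule: number of tenant AMP instances needed.
# $1 = applications under management for the tenant
# $2 = maximum applications per tenant AMP (hypothetical threshold)
tenant_amps_needed() {
    apps=$1
    per_amp=$2
    # Ceiling division, so a partly filled instance still counts as one.
    needed=$(( (apps + per_amp - 1) / per_amp ))
    # Always keep at least one tenant AMP, even with zero applications.
    [ "$needed" -lt 1 ] && needed=1
    echo "$needed"
}

# Example: tenant_amps_needed 25 10
```

With 25 applications and a threshold of 10 per instance, the function prints `3`.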

8. Versioning

AMP can maintain multiple versions of a “service blueprint” (i.e. of the AMP services, including the class or YAML and the OSGi bundles).

Semantic versioning (semver.org) is recommended for versioning of blueprints within AMP. However, the blueprint author is responsible for choosing the version numbers, and AMP does not enforce any particular scheme.
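A blueprint author who wants to check version strings before registering a blueprint could use something like the sketch below. It is a simplified check of the MAJOR.MINOR.PATCH shape with optional pre-release and build suffixes, not a complete implementation of the semver grammar, and it is not part of AMP.

```shell
#!/bin/sh
# Simplified semantic-version check: MAJOR.MINOR.PATCH with optional
# "-prerelease" and "+build" suffixes. Returns 0 when the string matches.
is_semver() {
    printf '%s\n' "$1" | grep -Eq \
        '^(0|[1-9][0-9]*)\.(0|[1-9][0-9]*)\.(0|[1-9][0-9]*)(-[0-9A-Za-z.-]+)?(\+[0-9A-Za-z.-]+)?$'
}

# Example:
#   is_semver "1.0.0" && echo "ok"
#   is_semver "1.0"   || echo "not a three-part version"
```

Note that a two-part version such as `1.0` is rejected by this check, while `0.2.0-SNAPSHOT` is accepted.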

Deploying versioned blueprints

For an AMP-of-AMPs, the new blueprint is added to all tenant AMPs by calling the add_blueprint effector on the AMP master.

Note that under-the-covers, this uses the AMP REST API of each tenant AMP to add the blueprints to the catalog (by posting to https://<endpoint>/v1/catalog).
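The fan-out to each tenant AMP's catalog can be pictured with the dry-run sketch below. It only prints the target URLs and performs no HTTP requests; the function names and the endpoint values are hypothetical, and only the `/v1/catalog` path comes from the text above.

```shell
#!/bin/sh
# Build the catalog URL for one tenant AMP endpoint.
catalog_url() {
    # Strip a trailing slash so the path separator is not doubled.
    printf '%s/v1/catalog\n' "${1%/}"
}

# Dry run: list where a blueprint file would be posted.
# $1 = blueprint file, remaining arguments = tenant AMP endpoints (hypothetical).
print_catalog_posts() {
    blueprint_file=$1
    shift
    for endpoint in "$@"; do
        echo "POST $blueprint_file -> $(catalog_url "$endpoint")"
    done
}

# Example: print_catalog_posts app.yaml https://10.0.0.5:8443 https://10.0.0.6:8443/
```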

Other Considerations

- C1) Support setting the default version of a blueprint used

- C2) Support listing blueprint versions

- C3) Support deleting blueprint versions

- C4) Installing to an existing version will replace it (an explicit “force” boolean flag may be required to prevent accidental collisions); this enables rollback

Limitations

- L1) Existing service instances cannot at present be repointed to new context paths.

- L2) Existing service instances cannot be migrated to new blueprint versions (this feature will follow, and will be the mechanism by which L1 is achieved)

Other Versioning Considerations

Many blueprints may make use of external artifacts such as Chef recipes, VM images (base OS installs), RPM files, and other resources. These will typically have their own versioning schemes. In order to ensure consistency in blueprint behaviour, it is recommended that:

- The blueprint takes URLs for all such external resources as configuration parameters

- These configuration parameters be supplied in the versioned blueprint YAML for the service

- The URLs for all external resources include the version of that resource, so that the content at that URL is effectively write-once

This ensures that a blueprint version never changes. If an updated recipe or artifact is desired as part of a blueprint, a new blueprint should be registered. As a separate feature, existing blueprints can also be updated to that version. This allows us to track exactly which artifacts/versions are in use as part of which service instances.

9. Deployment and Management

Master AMP

Installation of Master AMP

Obtain the amp-of-amps tar.gz archive and unpack it. If you require the download, please contact Cloudsoft.

Configuration of Master AMP

All setup can be done by creating a brooklyn.properties containing the runtime configuration.

This can be in ~/.brooklyn/brooklyn.properties (the default) or another file specified on the command line with --localAMPProperties FILE.

The recommended settings are described below.

Redistributable Archive

The AMP-of-AMPs redistributable archive must be available at runtime in order to set up the tenants. The location of this archive can be set using:

VERSION=0.2.0-SNAPSHOT

ampofamps.tenant.amp.download_url=http://path/to/ampofamps-${VERSION}-dist.tar.gz

If this key is not specified, the master AMP blueprint will default to looking in /tmp/ampofamps-${VERSION}-dist.tar.gz on the master AMP machine, from where it will be uploaded to the tenant AMP machine.

Additional archives can be added within the archive in lib/dropins.
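The fallback for the archive location can be summarised in a few lines of shell. This is only an illustration of the rule described above (use the configured URL if present, otherwise the /tmp path); the function name is hypothetical and the master AMP blueprint does not expose such a script.

```shell
#!/bin/sh
# Resolve where the tenant AMP archive is taken from.
# $1 = version string, $2 = configured download URL (may be empty or omitted).
tenant_archive_location() {
    version=$1
    configured_url=${2:-}
    if [ -n "$configured_url" ]; then
        echo "$configured_url"
    else
        # Default location on the master AMP machine.
        echo "/tmp/ampofamps-${version}-dist.tar.gz"
    fi
}

# Example: tenant_archive_location 0.2.0-SNAPSHOT
```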

Security and Access Control

Test Environments

For localhost-only dev/test deployments, see the “Debug” section. One can include in the master AMP blueprint:

use_localhost: true

Alternatively, a lightweight form of authorization using statically defined users and passwords is possible as follows (note that brooklyn.webconsole.security.provider must not be set):

brooklyn.webconsole.security.users=admin,myname

brooklyn.webconsole.security.user.admin.password=P5ssW0rd

# See /operations/configuration/brooklyn_cfg.html#authentication

# for details of generating hashed password, using `generate-password.sh --user myname`

brooklyn.webconsole.security.user.myname.salt=Qshb

brooklyn.webconsole.security.user.myname.sha256=72fa67c29ceec55858fdbd9df171733236aea00f556f3ccf92566a21f36ded19

Production Environments

To require SSL encryption, set:

brooklyn.webconsole.security.https.required=true

If using HTTPS, set the certificate which the AMP server will serve by including:

brooklyn.webconsole.security.keystore.url=...

brooklyn.webconsole.security.keystore.password=...

# alias is optional, if keystore has multiple certs

brooklyn.webconsole.security.keystore.certificate.alias=...

To use LDAP for authorization, set:

# Substitute the IP, password and realm for those in your own LDAP

brooklyn.webconsole.security.ldap.url=ldap://1.2.3.4:389

brooklyn.webconsole.security.ldap.password=mypassword

brooklyn.webconsole.security.ldap.realm=ou\=users,dc\=acme,dc\=com

brooklyn.webconsole.security.provider=brooklyn.rest.security.provider.LdapSecurityProvider

brooklyn.entitlements.global=io.cloudsoft.amp.entitlements.LdapEntitlementManager

SSH Key

Ensure that the master AMP machine has an SSH key in the default location of ~/.ssh/id_rsa or

~/.ssh/id_dsa. If necessary, generate a new key with ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa.

Optionally, to use a different key location, set:

# The publicKeyFile can be omitted if it is just the private key + .pub

brooklyn.location.jclouds.privateKeyFile=~/.ssh/amp_rsa

brooklyn.location.jclouds.publicKeyFile=~/.ssh/amp_rsa.pub

Note that if using non-jclouds locations (e.g. bring-your-own-nodes), then use brooklyn.location.privateKeyFile= instead of the brooklyn.location.jclouds prefix.
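The key check described in this section can be scripted. The sketch below wraps the `ssh-keygen` command given above so that an existing key is never overwritten; the function name is hypothetical and the default path is the one named in this section.

```shell
#!/bin/sh
# Ensure an SSH private key exists at the given path (default ~/.ssh/id_rsa).
# An existing key is left untouched; otherwise a new passphrase-less RSA key
# is generated with the ssh-keygen invocation shown in this section.
ensure_ssh_key() {
    key_file=${1:-"$HOME/.ssh/id_rsa"}
    if [ -f "$key_file" ]; then
        echo "exists: $key_file"
        return 0
    fi
    mkdir -p "$(dirname "$key_file")"
    ssh-keygen -t rsa -N "" -f "$key_file" >/dev/null && echo "created: $key_file"
}

# Example: ensure_ssh_key            (checks ~/.ssh/id_rsa)
#          ensure_ssh_key ~/.ssh/amp_rsa
```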

Master AMP High Availability [optional]

To run with high-availability, you must also turn on persistence and set the high-availability mode.

All instances should point at the same persistence store (e.g. a blobstore),

with one server nominated as the initial master and the others as failover servers

(or alternatively you can use auto for all to let the servers elect the master,

but in that case you will have to manually start the master AMP blueprint at the master node).

First you must configure the HA object store on each machine by placing this in ~/.brooklyn/brooklyn.properties,

replacing the object store target and credentials with your own:

brooklyn.location.named.amp-master-persistence-store=jclouds:openstack-swift:https://ams01.objectstorage.softlayer.net/auth/v1.0

brooklyn.location.named.amp-master-persistence-store.identity=abcd

brooklyn.location.named.amp-master-persistence-store.credential=0123456789abcdef...

brooklyn.location.named.amp-master-persistence-store.jclouds.keystone.credential-type=tempAuthCredentials

You can then set the following brooklyn.properties:

brooklyn.persistence.location.spec=named:amp-master-persistence-store

brooklyn.persistence.dir=amp-master-debug

The dir string can be anything you like, to uniquely identify the bucket that a given AMP plane uses inside the store; the -debug suffix is given as an example.

(Alternatively you can apply these as command-line arguments as

--persistenceLocation named:amp-master-persistence-store

and --persistenceDir amp-master-debug.

If you prefer to run without persistence, pass --persist disabled, or if you need to wipe the persistence store,

with all other servers stopped, run --persist clean.)

In a high-availability deployment, all the AMP servers must have access to the same

SSH public and private key. The easiest way to do this is to ensure that

~/.ssh/id_rsa and ~/.ssh/id_rsa.pub are the same across all master AMP servers.

For more information on configuring persistence, see the persistence docs.

Running Master AMP

We will launch an AMP instance, which will be the master AMP. We will deploy

the master AMP application into this AMP instance. Unlike most entities, deploying

the MasterAmp entity does not deploy a separate software process. Instead, it

just runs code in the local AMP instance that exposes effectors for creating tenants,

deploying services, etc. When we subsequently invoke those effectors, the MasterAmp

will create more entities for the tenants - these really will launch new VMs and

processes for the tenants.

Start the AMP instance with the following command:

server1% bin/amp.sh launch

This will launch the AMP console, by default on localhost:8081, or if security has been configured then on *.*.*.*:8443.

In the console, you can access the catalog and deploy applications to

configured locations.

If using an HA cluster, then after a short pause run the same command on additional servers:

server2% bin/amp.sh launch

server3% bin/amp.sh launch

The above sequence will force server1 to be the master. The selection of the master can also be triggered explicitly using the --highAvailability [master|standby|auto] CLI argument.

(When this argument is omitted, it runs in auto-detect mode, where the first

server will become the master and subsequent servers will become standby servers.)

Many other CLI options are available, identical to the AMP CLI options.

These are described by running bin/amp.sh help launch.

On First Run

The first time master AMP is run, you will need to deploy the MasterAmp blueprint

into that AMP node (i.e. to turn that AMP node into the “Master AMP”). On subsequent

runs if restoring from the persisted state this should not be done as the

application will be restored automatically.

From the web-console (of the primary, if running in HA mode), add a new application with the YAML below:

location: localhost

name: Master AMP

services:

- type: io.cloudsoft.ampofamps.master.MasterAmp

Alternatively, the first time master AMP is run, you can pass --master-amp at the CLI (to the instance chosen as primary for HA, e.g. server1 above).

Runtime Operations

The master AMP entity exposes effectors, including add_blueprint, create_tenant and

deploy_service.

These take a number of parameters, documented fully in the GUI (clicking on the effectors tab).

Some brief examples of invoking these via curl are shown below:

Adding New Blueprints

The master AMP entity exposes an effector that registers new blueprints with the master and all tenant AMPs.

The effector expects a JSON object with a single field, named “blueprint”, containing the YAML blueprint to be registered. For example, to invoke it with curl:

# These constants should be set for all curl commands.

# The id is that of the relevant entities within the master AMP blueprint instance.

# Note: command below assumes node.js is installed

export MASTER_BASE_URL=https://localhost:8443

export AMP_MASTER_APP_ID=`curl -s $MASTER_BASE_URL/v1/applications | xargs -0 node -e 'console.log(JSON.parse(process.argv[1])[0].id);'`

export AMP_MASTER_ENTITY_ID=$AMP_MASTER_APP_ID

curl \

--data-urlencode blueprint@/path/to/app.yaml \

$MASTER_BASE_URL/v1/applications/${AMP_MASTER_APP_ID}/entities/${AMP_MASTER_ENTITY_ID}/effectors/add_blueprint

Where /path/to/app.yaml contains a blueprint, for example:

brooklyn.catalog:

id: jboss

version: 1.0

services:

- type: brooklyn.entity.webapp.jboss.JBoss7Server

The new blueprint will be registered with all subsequently deployed tenant AMPs.

The YAML can also reference OSGi bundles that will be loaded when the blueprint is used. For example, it could look like:

brooklyn.catalog:

id: cassandra

version: 1.0.0

services:

- type: acme.amp.Cassandra

brooklyn.libraries:

- http://acme.com/osgi/acme/amp/cassandra/1.0.0/acme_amp_cassandra_1.0.0.jar

Create a New Tenant

To create a new tenant:

curl \

--insecure \

--user admin:password \

-H "Content-Type: application/json" \

-d '{

"tenant_id": "00001",

"tenant_name": "mpstanley",

"tenant_location": "softlayer:ams01",

"tenant_location_account_identity": "yourIdHere",

"tenant_location_account_credential": "0123456789abcdef0123456789abcdef0123456789abcdef0123456789abcdef"

}' \

$MASTER_BASE_URL/v1/applications/${AMP_MASTER_APP_ID}/entities/${AMP_MASTER_ENTITY_ID}/effectors/create_tenant\?timeout=0

This returns an AMP “activities” object as JSON, including an id field whose status and result can be tracked at /v1/activities/ID. (If timeout=0 is omitted, the request blocks until completed and returns the result, but that is not recommended here: provisioning can take several minutes, and the HTTP connection will probably time out first.)

Usage notes:

- The tenant_name is only needed for operator convenience.

- The tenant_location says where the tenant AMP instance should be created. The tenant_location_account_identity and tenant_location_account_credential give the credentials for this location. These credentials are not stored by the tenant AMP.

- When creating a new tenant, the catalog of the new tenant AMP instance will be overwritten with the blueprints configured at the master AMP.
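To follow the activity returned by create_tenant from a script, the status can be pulled out of the JSON with standard tools. The sketch below assumes the activity JSON carries a "currentStatus" string field with the value "In progress" while the task is running (as described in the FAQ of this chapter); the function names are hypothetical, and a real JSON parser would be more robust than this `sed` expression.

```shell
#!/bin/sh
# Extract the "currentStatus" field from an activity JSON document on stdin.
# Assumes the value is a plain string without escaped quotes.
activity_status() {
    sed -n 's/.*"currentStatus"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Succeeds (exit status 0) while the activity on stdin is still running.
activity_in_progress() {
    [ "$(activity_status)" = "In progress" ]
}

# Example (TASK_ID is the id returned by the effector call):
#   curl -s --insecure --user admin:password \
#     "$MASTER_BASE_URL/v1/activities/$TASK_ID" | activity_status
```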

Deploy Service

To deploy a service (i.e. a new application instance) for a given tenant:

curl \

--insecure \

--user admin:password \

-H "Content-Type: application/json" \

-d '{

"tenant_id": "00001",

"tenant_name": "mpstanley",

"tenant_service_id": "mpstanley-jboss1",

"service_display_name": "jboss 1",

"tenant_location": "localhost",

"tenant_location_account_identity": "yourIdHere",

"tenant_location_account_credential": "0123456789abcdef0123456789abcdef0123456789abcdef0123456789abcdef",

"blueprint_type": "simulated-jboss-entity"

}' \

$MASTER_BASE_URL/v1/applications/${AMP_MASTER_APP_ID}/entities/${AMP_MASTER_ENTITY_ID}/effectors/deploy_service\?timeout=0

Again, this returns an AMP “activities” object as JSON including an id field whose status and result can be tracked at /v1/activities/ID.

Usage notes:

- The mandatory fields are tenant_id, tenant_location and blueprint_type.

- If no tenant exists for the tenant_id when deploy_service is invoked, the tenant AMP will be created using the same credentials.

- The tenant_name is only needed for operator convenience. It is ignored if the tenant AMP already exists.

- The tenant_location determines where the application will be deployed. This (and the credentials) are not required to match those of the create_tenant call. The credentials are not cached.

- The tenant_service_id and service_display_name parameters are optional and are for operator/API convenience. The tenant_service_id in particular is useful to query subsequently, as it is set in the config of the service entity. (Alternatively you can poll the task response and you will get the tenant or service entity ID in the result once completed.)
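A caller that builds the deploy_service request body in a script may want to check the mandatory fields first. The sketch below produces a minimal JSON body containing only tenant_id, tenant_location and blueprint_type; the function name is hypothetical, values are assumed not to contain quotes or backslashes, and the credentials shown in the curl example would still need to be added where they are required.

```shell
#!/bin/sh
# Build a minimal deploy_service JSON body from the three mandatory fields.
# Values must not contain double quotes or backslashes (no escaping is done).
deploy_service_payload() {
    tenant_id=$1
    tenant_location=$2
    blueprint_type=$3
    if [ -z "$tenant_id" ] || [ -z "$tenant_location" ] || [ -z "$blueprint_type" ]; then
        echo "tenant_id, tenant_location and blueprint_type are mandatory" >&2
        return 1
    fi
    printf '{"tenant_id": "%s", "tenant_location": "%s", "blueprint_type": "%s"}\n' \
        "$tenant_id" "$tenant_location" "$blueprint_type"
}

# Example: deploy_service_payload 00001 localhost simulated-jboss-entity
```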

Tenant Lookup

There is a lookup effector on the master AMP entity which takes a single argument,

the id to search for among the internal IDs of all entities and the caller-supplied

tenant_id and tenant_service_id fields. This can be a regular expression.

The result is a list of summary info on all matching entities, including fields

tenant_url, tenant_url_user and tenant_url_password for tenant AMP instances,

and mirrored_entity_url{,_user,_password} for mirrored service instances.

For example, this will lookup everything associated with the tenant_id “00001”:

curl \

--insecure \

--user admin:password \

-H "Content-Type: application/json" \

-d '{ "id": "00001" }' \

$MASTER_BASE_URL/v1/applications/${AMP_MASTER_APP_ID}/entities/${AMP_MASTER_ENTITY_ID}/effectors/lookup

Stopping Tenants

The stop effector exists on all nodes. However stopping a tenant is possible only when there

are no services running. Likewise stopping everything (the root entity) on the master AMP works

only if there are no tenants running. To forcibly shut down a tenant there are two effectors

that can be used:

- stop_node_but_leave_apps: shuts down the tenant but leaves all services running

- stop_node_and_kill_apps: shuts down the tenant and stops all running services

To apply the effectors at once for all running tenants use the Tenants entity (which is a child of master AMP).

Analogously the effectors there are:

- stop_tenants_but_leave_services: shuts down all tenants but leaves their services running

- stop_tenants_and_kill_services: shuts down all tenants and stops all running services
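For scripted shutdowns, the choice between the four forced-stop effectors can be captured in a small lookup. The function and its arguments are hypothetical conveniences; only the effector names come from the lists above.

```shell
#!/bin/sh
# Map a scope and a keep-services flag to the forced-stop effector name.
# $1 = "node" (a single tenant AMP) or "tenants" (the Tenants entity)
# $2 = "yes" to leave services running, "no" to stop them as well
stop_effector_name() {
    scope=$1
    keep_services=$2
    case "$scope:$keep_services" in
        node:yes)    echo "stop_node_but_leave_apps" ;;
        node:no)     echo "stop_node_and_kill_apps" ;;
        tenants:yes) echo "stop_tenants_but_leave_services" ;;
        tenants:no)  echo "stop_tenants_and_kill_services" ;;
        *)
            echo "unknown scope or flag: $scope $keep_services" >&2
            return 1
            ;;
    esac
}

# Example: stop_effector_name tenants yes
```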

Versioning

Blueprint Versioning

The following is a very simple illustration of deploying and upgrading a versioned blueprint.

The YAML for the blueprint could look like:

brooklyn.catalog:

id: machine-entity-with-version

version: 1.0

services:

- type: brooklyn.entity.machine.MachineEntity

brooklyn.config:

postLaunchCommand: echo "launched v1.0" >> /tmp/amp-provisioning-log.txt

This could be deployed and used by a tenant:

curl \

--data-urlencode blueprint@/path/to/app-v1.0.yaml \

$MASTER_BASE_URL/v1/applications/${AMP_MASTER_APP_ID}/entities/${AMP_MASTER_ENTITY_ID}/effectors/add_blueprint

curl \

--insecure \

--user admin:password \

-H "Content-Type: application/json" \

-d '{

"tenant_id": "00001",

"tenant_name": "mpstanley",

"tenant_location": "localhost",

"tenant_location_account_identity": "yourIdHere",

"tenant_location_account_credential": "0123456789abcdef0123456789abcdef0123456789abcdef0123456789abcdef",

"blueprint_type": "machine-entity-with-version",

"service_display_name": "machine 2" }' \

$MASTER_BASE_URL/v1/applications/${AMP_MASTER_APP_ID}/entities/${AMP_MASTER_ENTITY_ID}/effectors/deploy_service\?timeout=0

The blueprint might then be updated, for example to the YAML shown below (note the change in version number, and the change in the postLaunchCommand):

brooklyn.catalog:

id: machine-entity-with-version

version: 1.1

services:

- type: brooklyn.entity.machine.MachineEntity

brooklyn.config:

postLaunchCommand: echo "launched v1.1" >> /tmp/amp-provisioning-log.txt

The new YAML could be deployed:

curl \

--data-urlencode blueprint@/path/to/app-v1.1.yaml \

$MASTER_BASE_URL/v1/applications/${AMP_MASTER_APP_ID}/entities/${AMP_MASTER_ENTITY_ID}/effectors/add_blueprint

A subsequent deploy_service call (identical to that above) will use the updated YAML. The contents of /tmp/amp-provisioning-log.txt would be “launched v1.0” and then “launched v1.1”.

To Upgrade an AMP

The preferred way to upgrade (or roll back) the version of AMP is to drive this via an AMP management plane for that cluster. In other words, to upgrade a Tenant AMP, drive it from Master AMP; and to upgrade a Master AMP, drive it from Overmaster.

There are two types of upgrades:

- For a single node, in-place, use the upgrade effector on the node entity: this spawns a new node on the same machine, ensuring that it can rebind in HOT STANDBY mode, then stops both nodes, replaces the older version files with the newer files, and restarts the node.

- For a cluster, use the upgradeCluster effector on the node entity: this creates a new node in the cluster, then additional new nodes, elects the first new node as the master, then stops the old nodes.

Note that persistence must be configured for both, either in the start script

or with brooklyn.config of the form

brooklynnode.launch.parameters.extra: --persist auto --persistenceDir /tmp/brooklyn-persistence-example/

10. Troubleshooting

Known issues

The known issues include:

- Whenever a new tenant AMP is starting, the top-level master AMP entity incorrectly shows the “on-fire” icon. The icon will return to normal once the tenant AMP has started.

- If a Tenant AMP is killed unexpectedly, there is excessive logging of the form:

WARN Setting AmpNodeImpl{id=xMZU6UaN} on-fire due to problems when expected running, up=false, not-up-indicators: {service.process.isRunning=The software process for this entity does not appear to be running}

- On restarting master AMP, rebinding to the persisted state, if the tenant AMP is not reachable then it is marked as “on-fire”. It will remain in this state even if the tenant AMP subsequently becomes reachable again. No operations can be invoked on the tenant AMP (through the master AMP) when it is in this state!

- After restarting AMP (such that it rebinds to persisted state), the activity history for those existing entities will no longer be available.

- There is no simple way to retrieve the list of deployed blueprints programmatically.

- There is inconsistent naming for effectors: MasterAmp uses underscore_effector_names, but the TenantGroup uses camelCaseEffectorNames. These will be changed soon.

FAQ

Where is the log and debug information for master AMP?

The Master AMP writes its logs to *.debug.log.

The Master AMP web-console is the easiest way to see the overall status, and to drill into activity history. The activity history will show the effector history, including success/fail. Selecting a row will show details of that activity including failure info.

How do I connect to web console of Tenant AMP?

In the master AMP web console, select the tenant AMP and click on the Sensors tab.

The brooklynnode.webconsole.url sensor gives the URL.

To get the web console login credentials of the tenant AMP, click on the Summary tab and

expand the Config section. The brooklynnode.managementPassword config key gives the

password to use. The username will be “admin”.
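The sensor value can also be read without opening the web console. The following is a minimal Python sketch, assuming the master AMP exposes a Brooklyn-style REST path of the form /v1/applications/<app>/entities/<entity>/sensors/<sensor> and returns the value as JSON. The function names, the injectable opener and the placeholder IDs are illustrative only, and any authentication your installation requires would have to be added to the request.

```python
import json
import urllib.parse
import urllib.request


def sensor_url(base_url, app_id, entity_id, sensor_name):
    """Build the REST URL for one sensor of one entity.

    The path layout is an assumption; verify it against your AMP's REST docs.
    """
    parts = [urllib.parse.quote(p, safe="") for p in (app_id, entity_id, sensor_name)]
    return "{}/v1/applications/{}/entities/{}/sensors/{}".format(
        base_url.rstrip("/"), *parts)


def read_sensor(base_url, app_id, entity_id, sensor_name,
                opener=urllib.request.urlopen):
    """Fetch and decode a sensor value; `opener` can be stubbed for testing."""
    request = urllib.request.Request(
        sensor_url(base_url, app_id, entity_id, sensor_name),
        headers={"Accept": "application/json"})
    with opener(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling read_sensor with the master AMP base URL, the application ID, the tenant AMP entity ID and the sensor name given above would return the web console URL, provided the assumptions hold.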

How do I find the tenant AMP logs?

You will need to SSH to the machine where the tenant AMP is running, and go to the run directory. There you will find the log files.

In the master AMP web console, select the tenant AMP entity and look at its Sensors. Get the values of the host.sshAddress and run.dir sensors. Use these to ssh to the tenant AMP machine, and look in the run directory for the console and *.debug.log files.

If the run.dir sensor has not been set, the tenant AMP failed at an earlier stage (e.g. during install). You can look at the install.dir sensor to find where the install artifacts should be.
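Once the two sensor values are known, the SSH step can be scripted. The following is a minimal Python sketch, assuming that host.sshAddress has the form user@host:port (check the actual value shown in your console) and that the log files of interest match *.debug.log in the run directory. The helper name is hypothetical.

```python
import shlex


def tail_logs_command(ssh_address, run_dir, lines=200):
    """Return an ssh argv that tails the debug logs in the tenant AMP run directory.

    Assumes ssh_address looks like "user@host:port"; the port part is optional.
    """
    user_host, sep, port = ssh_address.rpartition(":")
    if not sep or not port.isdigit():
        # No ":port" suffix found, so treat the whole string as user@host.
        user_host, port = ssh_address, "22"
    # Quote the directory for the remote shell but leave the glob unquoted.
    remote = "tail -n {} {}/*.debug.log".format(int(lines), shlex.quote(run_dir))
    return ["ssh", "-p", port, user_host, remote]
```

The returned list can be passed directly to subprocess.run, which avoids a second round of quoting on the local side.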

How do I get a list of deployed blueprints?

Unfortunately there is not yet a simple way to retrieve the list of deployed blueprints programmatically.

In the web console (of master AMP or a tenant AMP), click on the Catalog tab, and then on Entities (on the left). This will expand to show the list of deployed blueprints. Click a blueprint’s name to see additional details. The “Plan” shows the YAML that was deployed.

Did my async effector succeed?

Effectors invoked (via the REST API) with ?timeout=0 are asynchronous. The response will be the JSON for a task being executed. It will include the id, a description such as "Invoking effector create_tenant...", and a "currentStatus" (most likely "In progress").

You can use the Master AMP web-console to view the task (by going to the Applications tab, selecting the Master AMP top-level entity in the tree, selecting the Activities tab, and clicking on the appropriate row).

Alternatively, you can use the REST API to retrieve the task details:

TASK_ID=<id returned in json>

curl $MASTER_BASE_URL/v1/activities/${TASK_ID}

Did my synchronous effector succeed?

Effectors invoked without ?timeout=0 are synchronous (i.e. blocking).

The JSON response will be the result of the effector. For example, add_blueprint returns [].

On failure, a JSON response describing the failure will be returned.
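For scripted checks, the asynchronous case can be automated by polling the task until it finishes (a synchronous call needs no polling, since its response is already the result). The sketch below is in Python and rests on several assumptions: that the task JSON carries endTimeUtc and isError fields alongside the id and currentStatus, that fetch_task is a caller-supplied function wrapping the /v1/activities/<id> request (including any authentication), and that the function names are illustrative rather than part of the product.

```python
import time


def task_outcome(task):
    """Classify a task JSON dict as "running", "failed" or "succeeded".

    The field names endTimeUtc and isError are assumptions about the task JSON.
    """
    if task.get("endTimeUtc") is None:
        return "running"
    return "failed" if task.get("isError") else "succeeded"


def wait_for_task(fetch_task, task_id, poll_seconds=2.0, timeout_seconds=600.0,
                  sleep=time.sleep, clock=time.monotonic):
    """Poll fetch_task(task_id) until the task ends or the timeout expires."""
    deadline = clock() + timeout_seconds
    while True:
        task = fetch_task(task_id)
        outcome = task_outcome(task)
        if outcome != "running":
            return outcome, task
        if clock() >= deadline:
            raise TimeoutError("task {} still running: {}".format(
                task_id, task.get("currentStatus")))
        sleep(poll_seconds)
```

Passing the fetch function in as a parameter keeps the polling logic independent of how the HTTP request is made, so the same loop works with curl output, urllib, or a stub in tests.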

My blueprint won’t deploy

If the yaml is invalid, you’ll get a response such as:

{"message":"Invalid YAML: null; mapping values are not allowed here; in 'reader', line 6, column 13:\n - type: type: brooklyn.qa.load.SimulatedJBos ... \n ^"}

The following curl arguments can also be useful for diagnosing problems. They show the HTTP status code and follow redirects:

curl -sL -w "%{http_code} %{url_effective}\n" ...

My Tenant AMP did not start on localhost

If you run Master AMP without the configuration use_localhost: true, then it does not expect you to deploy Tenant AMPs to localhost. If you are using a global ~/.brooklyn/brooklyn.properties then the Tenant AMP will also pick this up. It will therefore use the same persistence store. With default settings, it will therefore become a standby node for the Master AMP!

There are three ways you can see this:

- In the master AMP, on the Home tab you’ll see an additional server listed as status “Standby”.

- When opening the tenant AMP web console, a popup will inform you that the node is in “standby”, and you will be re-directed to the master AMP! (See “How do I connect to web console of Tenant AMP?”)

- In the tenant AMP logs, it will say that the instance has started as a standby. (See “How do I find the tenant AMP logs?”)

My Tenant AMP did not start

If the Tenant AMP fails to start (i.e. it shows “on-fire” in the Master AMP web-console), the following should be investigated.

- In the web-console, select the Tenant AMP and click on the Activity tab. Did the “start” task fail? Drill into the task by clicking on it, and then look at its “Children Tasks”, which can also be drilled into by clicking on them.

- Look at the logs of the Tenant AMP itself. (See “How do I find the tenant AMP logs?”)

My tenant’s service did not start

If the application created by deploy_service failed to start correctly, you can first use the master AMP web-console to view the “mirrored entity” that represents the application instance. This shows the sensors of the top-level application.

To drill into the activities and further details of the application, you’ll need to connect to the tenant AMP’s web-console (see “How do I connect to web console of Tenant AMP?”). From there, you can select the relevant application and its child entities, view the Sensors tab, and view the Activity tab to see the commands executed when launching the application.

11. Running in Debug Mode

A special debug mode where all deployments are localhost is available. It is not necessary to supply any cloud credentials, and all tenants and services will be created on localhost. (This requires passwordless localhost SSH access.)

This is configured on the master AMP by setting the config key ampofamps.debug.use_localhost to true. (The short name is use_localhost.)

From the Command Line

For example, the YAML could look like:

location: localhost
name: Master AMP
services:
- type: io.cloudsoft.ampofamps.master.MasterAmp
  brooklyn.config:
    use_localhost: true
    skip_controllers: true
    # Additional optional config includes:
    tenant_amp_use_https: false
    download_url: file:///tmp/ampofamps-0.2.0-SNAPSHOT-dist.tar.gz
    brooklyn.mirror.poll_period: 5s

You can launch this using the command below (where the specified file contains the YAML above):

bin/amp.sh launch --app amp-master-debug.yaml

Note that the effectors are then available on the child of the blueprint, not at the root. This will impact the IDs needed in the curl commands. The following command is useful for finding the id of the first child of the app:

export AMP_MASTER_ENTITY_ID=`curl -s $MASTER_BASE_URL/v1/applications/$AMP_MASTER_APP_ID/entities/ | xargs -0 node -e 'console.log(JSON.parse(process.argv[1])[0].id);'`

Alternatively, one can launch AMP without any applications, and then deploy via the REST API or web-console.
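Where Node.js is not installed, the child-entity lookup shown above can be done in Python instead. This is a minimal sketch, assuming (as the node one-liner does) that the entities/ endpoint returns a JSON array whose elements carry an id field. The function name is illustrative.

```python
import json


def first_child_id(entities_json):
    """Return the id of the first entity in the JSON array returned by .../entities/."""
    entities = json.loads(entities_json)
    if not entities:
        raise ValueError("application has no child entities yet")
    return entities[0]["id"]
```

The input is the response body of the same curl request; unlike the one-liner, this version reports an empty entity list explicitly instead of failing on a missing array element.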

Eclipse

To run the project from within Eclipse, first ensure that the download_url file exists, and then create a launch configuration for io.cloudsoft.ampofamps.AmpOfAmpsMain with the arguments launch --persist disabled --app src/test/resources/master-amp-localhost.yaml and, as a recommendation, the JVM arguments -Xmx1024m -Dbrooklyn.location.localhost.address=127.0.0.1.

Tips

- If you are rebuilding the archive to deploy to localhost, you may have to clear the local AMP cache:

rm -rf /tmp/brooklyn-`whoami`/installs/AmpNode_*

- For a blueprintType which starts up very quickly (doing nothing) you can use:

blueprintType: io.cloudsoft.ampofamps.blueprints.NoOp