3-2 Sensors, Effectors, Config

In this exercise we will expand the blueprint from the previous exercise to show more advanced Cloudsoft AMP techniques for attaching useful information, operations, and configuration. Specifically you will learn how to:

- Collect individual resources from Terraform for AMP management
  - Based on resource type or tags or other information
  - Attaching connection information
- Extend the AMP management of individual resources
  - Custom “off-box” and “on-box” sensors to report the size of data on the EFS
  - Custom effectors to improve the SSH access mechanism after creation
  - Custom startup logic to improve the SSH access mechanisms at creation time

This lays the foundation for in-life automation and compliance, covered in the next exercise, using sensors to trigger effectors and other behavior through policies.

Adding custom sensors using containers

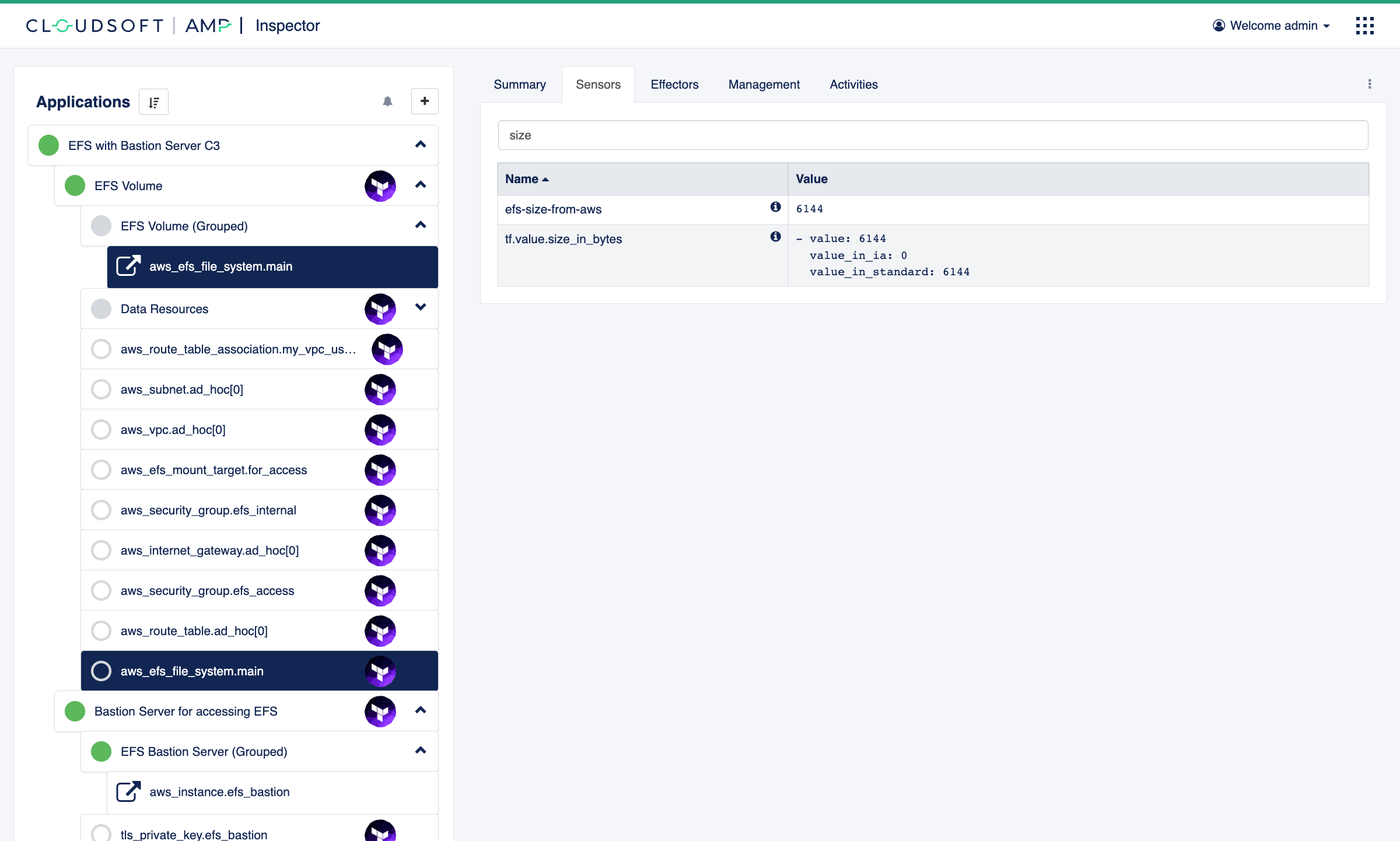

You may have noticed in the previous exercise that the aws_efs_file_system.main Terraform resource under the “EFS Volume” Terraform entity included a sensor for tf.value.size_in_bytes, returning:

    - value: 6144
      value_in_ia: 0
      value_in_standard: 6144

AMP by default refreshes Terraform periodically. AWS includes this metric as an attribute on the resource, so Terraform retrieves it as part of the refresh and AMP pulls it in as a sensor.

This data is interesting, in particular the value key in the first element of the list; from an AMP policy perspective, however, it will be more useful to extract that field as a number in its own sensor. We will do this by attaching management logic to run a container using the JSON query tool jq whenever the sensor tf.value.size_in_bytes changes.

The bash transform takes that sensor value, serializes it as JSON, then escapes it and wraps it in bash double quotes; this is then passed to jq, whose filter .[0].value extracts the value from the first element.

We will specify an initializer of type workflow-sensor, shown below.

The next section will show how to deploy this.

    - type: workflow-sensor
      brooklyn.config:
        sensor:
          name: efs-size-from-aws
          type: integer
        triggers:
          - tf.value.size_in_bytes
        steps:
          - transform size_json = ${entity.sensor['tf.value.size_in_bytes']} | bash
          - container stedolan/jq echo ${size_json} | jq '.[0].value'
          - return ${stdout}
        on-error:
          - clear-sensor efs-size-from-aws
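
The two shell-level pieces of this workflow can be tried outside AMP. The following is a minimal sketch, assuming jq is installed locally. The sample JSON mirrors the sensor value shown earlier, the escaped double-quoted string only approximates what the bash transform produces, and the function name extract_value is hypothetical.

```shell
# Approximation of the output of the `bash` transform: the JSON is escaped
# and wrapped in double quotes so the shell passes it through intact.
size_json="[{\"value\":6144,\"value_in_ia\":0,\"value_in_standard\":6144}]"

# Hypothetical helper applying the same jq filter as the container step:
# take the first list element, then its "value" field.
extract_value() {
  printf '%s\n' "$1" | jq '.[0].value'
}

# Only run the demo when jq is available on the PATH.
if command -v jq >/dev/null 2>&1; then
  extract_value "$size_json"
fi
```

With the sample value this prints 6144, the plain number that the workflow then returns as the efs-size-from-aws sensor.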


Collecting and extending discovered resources

If we were attaching that to an entity defined in the AMP blueprint, we could specify that in a list of brooklyn.initializers on any entity. Here, however, we want to attach it to an entity discovered at runtime, when AMP automatically pulls in the resources and data objects that Terraform creates.

To do this, we need to let AMP know how to attach to a dynamic entity.

For this we will use three new AMP building blocks:

- The brooklyn.children block lets us create rich hierarchies to model the topology of the managed application, allowing simpler high-level views and aggregate operations targeting different logical components of deployments that might have a complex set of resources
- The org.apache.brooklyn.entity.group.DynamicGroup entity collects and manages entities based on conditions
- The org.apache.brooklyn.entity.group.GroupsChangePolicy policy declares rules for what to do to entities collected by the group, such as attaching sensors, effectors, and other policies

Our “EFS Volume” service previously had 5 lines; below, you can see the additions to that service. This adds a child node of type DynamicGroup, configured to accept as a member any resource with aws_efs_file_system.main set as its tf.resource.address config, and a GroupsChangePolicy policy which will apply the workflow-sensor initializer above to any member found:

    - name: EFS Volume
      type: terraform
      id: efs-volume
      brooklyn.config:
        tf.configuration.url: https://docs.cloudsoft.io/tutorials/exercises/3-efs-terraform-deep-dive/3-1/efs-volume-tf.zip
      brooklyn.children:
        - type: org.apache.brooklyn.entity.group.DynamicGroup
          name: EFS Volume (Grouped)
          brooklyn.config:
            dynamicgroup.entityfilter:
              config: tf.resource.address
              equals: aws_efs_file_system.main
          brooklyn.policies:
            - type: org.apache.brooklyn.entity.group.GroupsChangePolicy
              brooklyn.config:
                member.initializers:
                  - type: workflow-sensor
                    brooklyn.config:
                      sensor:
                        name: efs-size-from-aws
                        type: integer
                      triggers:
                        - tf.value.size_in_bytes
                      steps:
                        - transform size_json = ${entity.sensor['tf.value.size_in_bytes']} | bash
                        - container stedolan/jq echo ${size_json} | jq '.[0].value'
                        - return ${stdout}
                      on-error:
                        - clear-sensor efs-size-from-aws

The full blueprint is here.

Redeploy

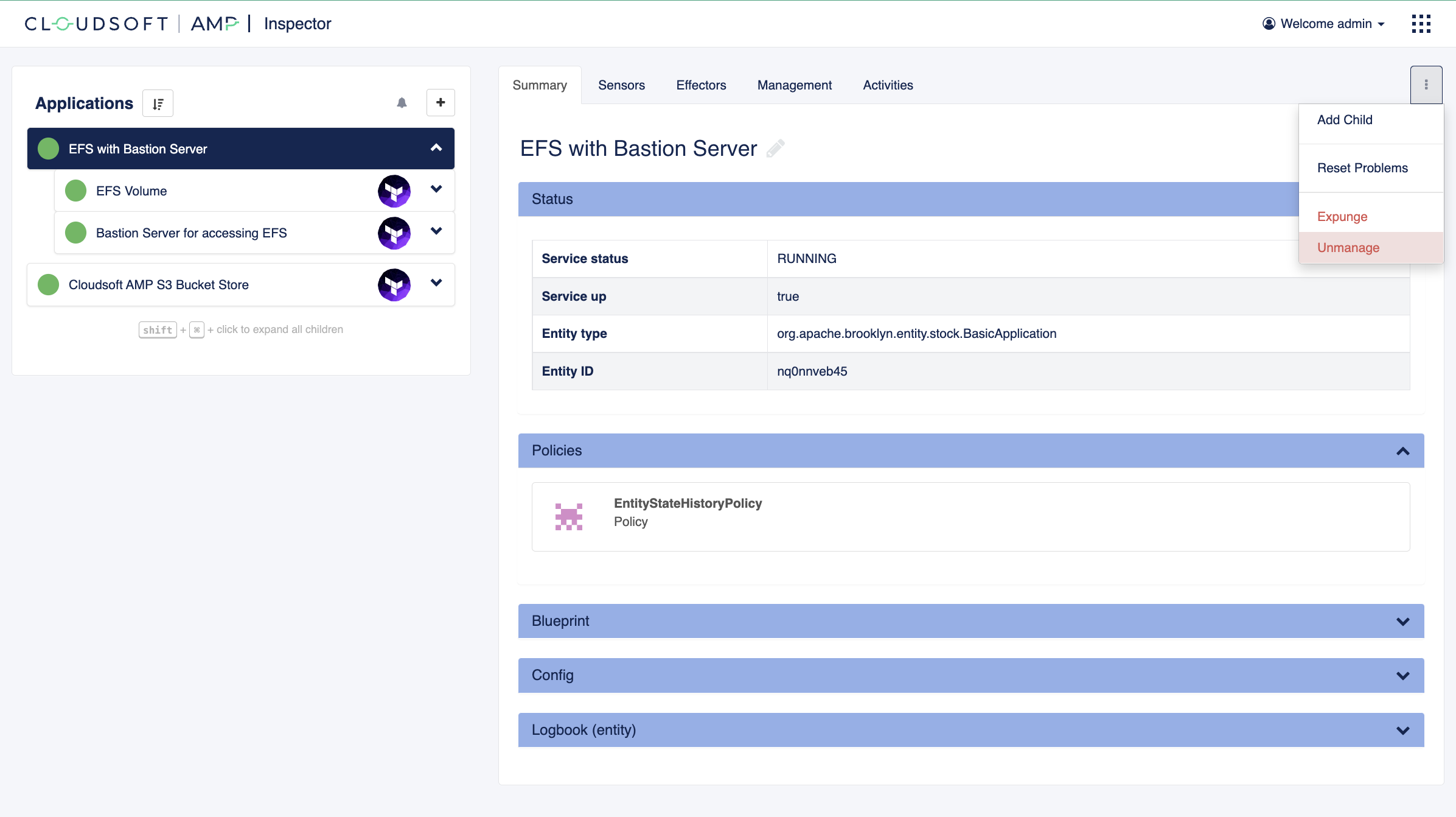

To efficiently update our deployment, we will “unmanage” the current “EFS with Bastion Server” deployment and deploy the updated blueprint. AMP “unmanage” will leave the Terraform-managed infrastructure intact, and by re-deploying with the same bucket, Terraform will detect that it is already present and report it to AMP.

- Select the “EFS with Bastion Server” application in the Inspector
- Click the three-dots button towards the top-right and select “Unmanage”.
- Copy the blueprint and paste it into the Composer CAMP Editor. This is essentially the same as using the catalog then “Edit in Composer”, but faster now that you are familiar with the basics.
- Switch to the graphical composer and set the bucket_name and demo_name to the same values as in the previous deployment.
- Click “Deploy” and confirm.


This deploys in AMP more quickly because there is nothing to provision in the cloud, although it may take a minute or two to initialize Terraform and read state and existing resources.

Once deployed, you will see the new “EFS Volume (Grouped)” entity as a child of the “EFS Volume” entity, and the AMP entities found that match the filter underneath. Here there should be one entity collected: aws_efs_file_system.main will show up as a member of our group in addition to being a child of “EFS Volume”, and it should have our custom sensor efs-size-from-aws.

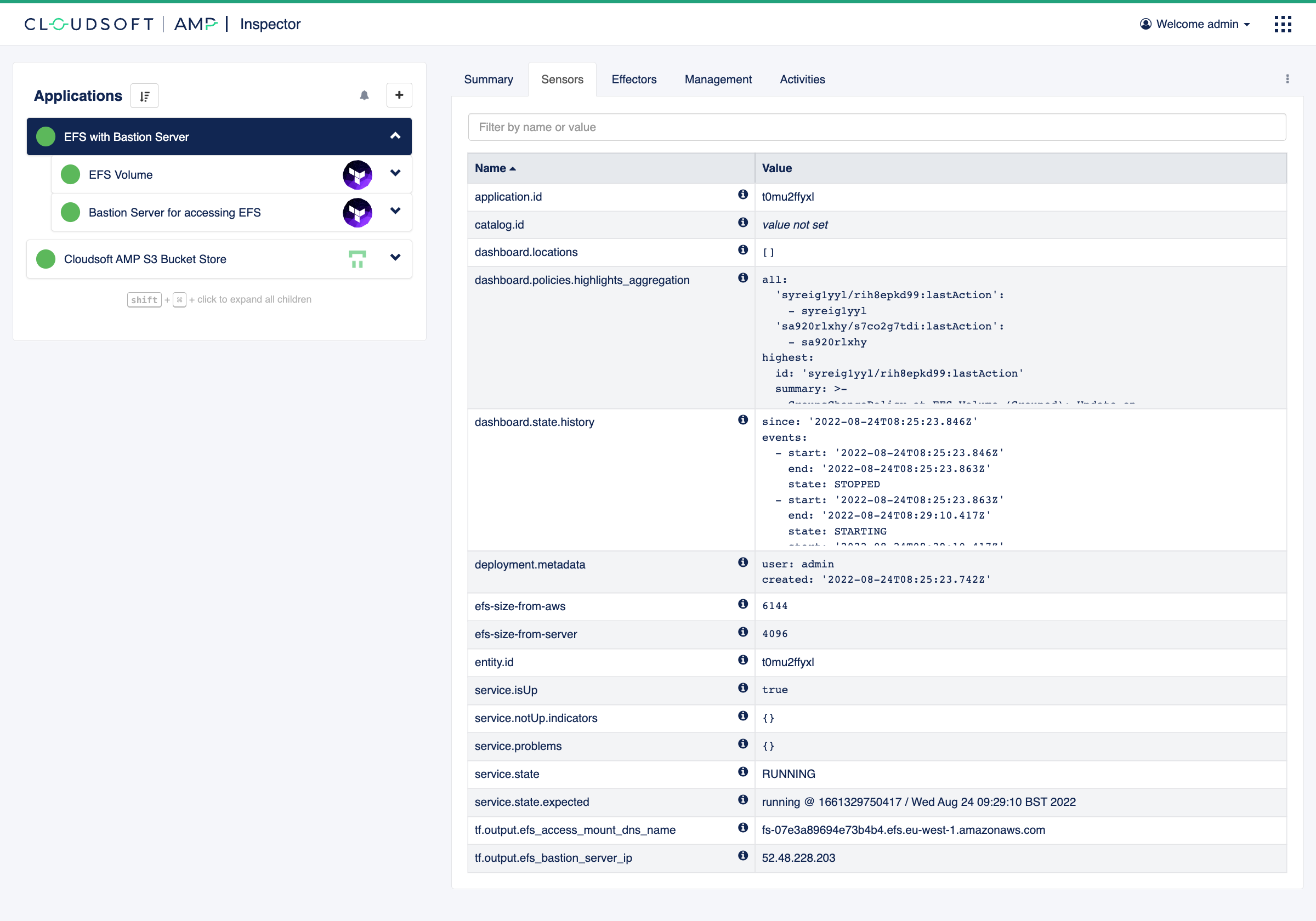

Adding on-box sensors using SSH

If you’ve run through the exercises quickly, you may notice a minor problem: AWS only updates its size report every hour, so 6 kB might – correctly – be computed as the efs-size-from-aws for up to an hour. Eventually it will update, but this illustrates one reason why it is often useful to take telemetry from multiple sources, indicate the provenance, e.g. “from-aws”, and be able to inspect (in the Activities view) when and where data originated.

The workflow-sensor with the container step runs “off-box” – in a container – and can be used to query information from many sources, pulling from common monitoring systems, CMDBs, etc. For the EFS size, a more reliable source will be to run “on-box”, connecting to the bastion server and using du to compute actual disk usage there.

To do this, we need to set up a similar DynamicGroup for the bastion server, and this time our GroupsChangePolicy will include:

- in member.initializers, a workflow-sensor with an ssh step similar to the container step, configured to run every 2 minutes running the command du --block-size=4096 | tail -1 | awk '{print $1 * 4096}' and updating a temporary file as a breadcrumb
- in a new block member.locations, a type SshMachineLocation which associates “locations” in AMP’s model with entities, informing AMP of the connection details for the server

The “Bastion Server” in our blueprint gets a brooklyn.children entry similar to what we did for the “EFS Volume”, as follows:

    - name: Bastion Server for accessing EFS
      type: terraform
      brooklyn.config:
        tf.configuration.url: https://docs.cloudsoft.io/tutorials/exercises/3-efs-terraform-deep-dive/3-1/efs-server-tf.zip
        tf_var.subnet: $brooklyn:entity("efs-volume").attributeWhenReady("tf.output.efs_access_subnet")
        tf_var.efs_security_group: $brooklyn:entity("efs-volume").attributeWhenReady("tf.output.efs_access_security_group")
        tf_var.efs_mount_dns_name: $brooklyn:entity("efs-volume").attributeWhenReady("tf.output.efs_access_mount_dns_name")
        tf_var.ami_user: ec2-user
      brooklyn.children:
        - type: org.apache.brooklyn.entity.group.DynamicGroup
          name: EFS Bastion Server (Grouped)
          brooklyn.config:
            dynamicgroup.entityfilter:
              config: tf.resource.address
              equals: aws_instance.efs_bastion
          brooklyn.policies:
            - type: org.apache.brooklyn.entity.group.GroupsChangePolicy
              brooklyn.config:
                member.locations:
                  - type: org.apache.brooklyn.location.ssh.SshMachineLocation
                    brooklyn.config:
                      user: $brooklyn:config("tf_var.ami_user")
                      address: $brooklyn:parent().attributeWhenReady("tf.output.efs_bastion_server_ip")
                      privateKeyData: $brooklyn:parent().attributeWhenReady("tf.output.efs_bastion_server_private_key")
                member.initializers:
                  - type: workflow-sensor
                    brooklyn.config:
                      sensor:
                        name: efs-size-from-server
                        type: integer
                      period: 2m
                      steps:
                        - ssh du --block-size=4096 /mnt/shared-file-system | tail -1 | awk '{print $1 * 4096}'
                        - let result = ${stdout}
                        - ssh date > /tmp/cloudsoft-amp-efs-size-from-server.last-date
                        - return ${result}
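
The arithmetic in the first ssh step can be checked without a server. This is a minimal sketch using canned text in place of real du output; the paths and block counts are made up, and du_blocks_to_bytes is a hypothetical helper name. du prints one line per directory with the total for the top-level directory last, so tail -1 keeps that total and awk multiplies the count of 4096-byte blocks back into bytes.

```shell
# Hypothetical helper: read du-style lines on stdin, keep the last line
# (the total for the top-level directory) and convert blocks to bytes.
du_blocks_to_bytes() {
  tail -1 | awk '{print $1 * 4096}'
}

# Canned stand-in for `du --block-size=4096 /mnt/shared-file-system`:
# each line is <block count><TAB><path>.
printf '1\t/mnt/shared-file-system/logs\n3\t/mnt/shared-file-system\n' | du_blocks_to_bytes
```

For the sample input the last line reports 3 blocks, so the pipeline prints 12288.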

We will do two more things before we redeploy.

Promoting sensors with enrichers

There are a lot of resources, entities, and sensors, even for this simple deployment. This is often a fact of life with cloud services, especially as applications become more complicated. AMP’s ability to represent topology allows this complexity to be buried.

For that to work well, we need to promote important sensors so they are easier to find, in addition to the low-level collection and processing of them done previously.

Cloudsoft AMP’s “enrichers” are logic which re-processes sensors. We will start with an “aggregator” at each of our dynamic groups to take the efs-size-* sensors from the member it collects. As there can be multiple members to such a group, we will tell it to transform that list by taking the “first” sensor it finds, and make it available on that entity.

We will also assign an id to each group, so we can reference it next.

At the EFS Volume (Grouped):

    id: efs-volume-grouped
    brooklyn.enrichers:
      - type: org.apache.brooklyn.enricher.stock.Aggregator
        brooklyn.config:
          enricher.sourceSensor: efs-size-from-aws
          enricher.targetSensor: efs-size-from-aws
          transformation: first   # list, sum, min, max, average

And at the EFS Bastion Server (Grouped):

    id: efs-bastion-server-grouped
    brooklyn.enrichers:
      - type: org.apache.brooklyn.enricher.stock.Aggregator
        brooklyn.config:
          enricher.sourceSensor: efs-size-from-server
          enricher.targetSensor: efs-size-from-server
          transformation: first

Next, let us “propagate” these sensors – and a couple others – at the root of our application, pulling the key sensors from the entities in question:

    brooklyn.enrichers:
      - type: org.apache.brooklyn.enricher.stock.Propagator
        brooklyn.config:
          producer: $brooklyn:entity("efs-volume-grouped")
          propagating:
            - efs-size-from-aws
      - type: org.apache.brooklyn.enricher.stock.Propagator
        brooklyn.config:
          producer: $brooklyn:entity("efs-volume")
          propagating:
            - tf.output.efs_access_mount_dns_name
      - type: org.apache.brooklyn.enricher.stock.Propagator
        brooklyn.config:
          producer: $brooklyn:entity("efs-bastion-server-grouped")
          propagating:
            - efs-size-from-server
      - type: org.apache.brooklyn.enricher.stock.Propagator
        brooklyn.config:
          producer: $brooklyn:entity("efs-bastion-server")
          propagating:
            - tf.output.efs_bastion_server_ip

Adding effectors

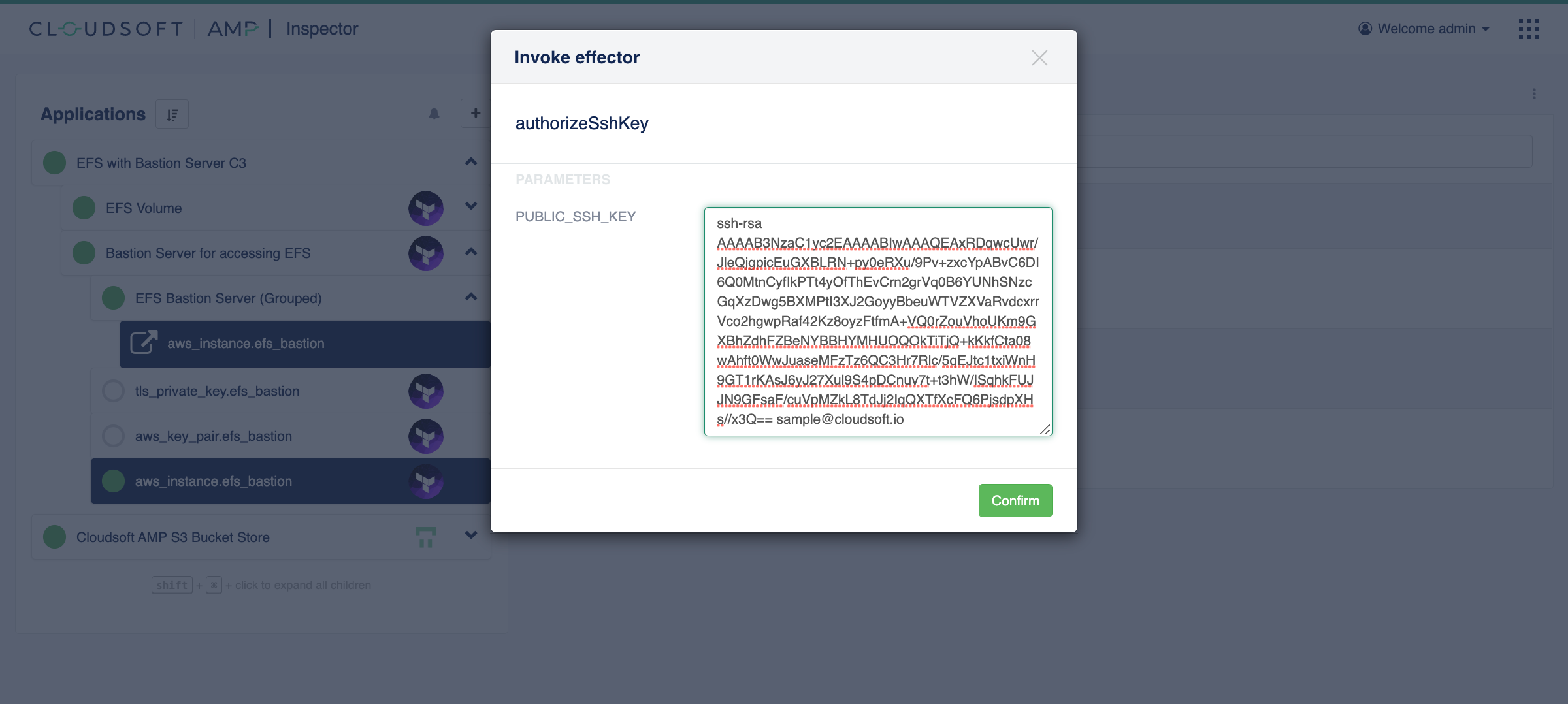

Another area of this blueprint we’d like to improve is the shared use of a generated key to access the bastion server. Ideally, we’d be able to add our own SSH public key. Let’s add an “effector” to do this.

Effectors define actions we can do against entities in AMP. As with sensors, we will add an initializer with workflow. The workflow here defines the steps (or single step, in this case) which should be run when the effector is invoked. The one addition here is the definition of input parameters that a caller should supply.

The initializer we will add looks like this:

    type: workflow-effector
    brooklyn.config:
      name: authorizeSshKey
      parameters:
        PUBLIC_SSH_KEY:
          description: SSH key (public part) to authorize on this machine
      steps:
        - ssh echo ${PUBLIC_SSH_KEY} >> ~/.ssh/authorized_keys

This will be attached to the same EC2 instance pulled in by “EFS Bastion Server (Grouped)”, in the member.initializers block after the workflow-sensor. The full blueprint can be viewed here.

Redeploy

Now let’s redeploy:

- Select the existing “EFS with Bastion Server” application in the Inspector and unmanage it, as before
- Copy the blueprint and paste it into the Composer CAMP Editor.
- Switch to the graphical composer and set the bucket_name and demo_name to the same values as in the previous deployment.
- Click “Deploy” and confirm.

Once this infrastructure is fully deployed, navigate to the “Effectors” tab on the aws_instance.efs_bastion node and note the new effector on the resource: authorizeSshKey. Invoke this giving the public part of your SSH key, which typically can be found in ~/.ssh/id_rsa.pub (search for and run the ssh-keygen command if you do not have one).

If your key includes an email address at the end, then this will need to be removed if surrounded by < and >.
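
The caveat about < and > most likely arises because the key text is placed unquoted into the shell command, where those characters are read as redirections; this is an assumption, as the cause is not stated here. The sketch below shows quoted handling plus a duplicate check, since the effector as written appends a line on every invocation. It runs against a temporary file rather than a real ~/.ssh/authorized_keys, and both the helper name authorize_key and the key text are hypothetical.

```shell
# Hypothetical helper: append a public key line to a file only if it is
# not already present. $1 = target file, $2 = public key line.
authorize_key() {
  key_file=$1
  public_key=$2
  # -q quiet, -x whole-line match, -F fixed string (no regex interpretation)
  if ! grep -qxF -- "$public_key" "$key_file" 2>/dev/null; then
    # Quoting keeps characters such as < and > literal
    printf '%s\n' "$public_key" >> "$key_file"
  fi
}

# Demo against a temporary file, with a made-up key line
demo_file=$(mktemp)
demo_key='ssh-ed25519 EXAMPLEKEYDATA demo <demo@example.com>'
authorize_key "$demo_file" "$demo_key"
authorize_key "$demo_file" "$demo_key"   # second call adds nothing
cat "$demo_file"
```

The file ends up with a single copy of the key line, angle brackets included. In the workflow itself the value is substituted into the command text by AMP before the shell sees it, so this only illustrates the shell behaviour, not a drop-in replacement for the effector step.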

As with the initial deployment, all the details of each effector invocation are available in the Activities view, including the stdin and stdout. The same is true for all sensors and policies; for example, you can explore the GroupsChangePolicy on either of the dynamic group entities in the “Management” tab, drill into its activities, and query the associated log messages.

After the effector has successfully done its job, you should be able to SSH to the bastion server from the command line using your standard key: ssh -o HostKeyAlgorithms=ecdsa-sha2-nistp256 ec2-user@<IP_ADDRESS>. You could then run cat /tmp/cloudsoft-amp-efs-size-from-server.last-date to see the breadcrumb left by our on-box sensor.

And let’s take a quick look at our new sensors:

Initializing entities and adding custom configuration

The authorizeSshKey effector has a flaw: any key added will be added immediately but then not remembered by the model, and it will be lost if we stop and restart the bastion server. To make the keys persist across a restart, we need to make the keys to authorize part of the model. Adding a new brooklyn.config key, e.g. authorized_keys, is the way to do this.

We have a choice of how to make it install when the server starts. One common pattern with Terraform is to define authorized_keys also as a variable in our Terraform, and pass it by setting tf_var.authorized_keys: $brooklyn:config("authorized_keys"). Within Terraform we can use a provisioner to install it or we can use the cloud-init metadata. These are both fully supported by AMP, but with AMP there is a third option which can give more flexibility and visibility: we will tell AMP to invoke an effector when the server comes up.

We already have the effector, so we simply need two minor changes:

- Add a member.invoke argument to the GroupsChangePolicy supplying a list of the effectors to invoke when the member is found; in this case the list will contain our authorizeSshKey effector
- Add a default value for the PUBLIC_SSH_KEY parameter on the authorizeSshKey effector pointing at the new config key $brooklyn:config("authorized_keys")

This is done by adding the lines marked with comments in the code below, namely the defaultValue line and the closing member.invoke block:

    # in the GroupsChangePolicy on the EFS Bastion Server (Grouped)
    member.initializers:
      # ... (omitted for brevity)
      - type: workflow-effector
        brooklyn.config:
          name: authorizeSshKey
          parameters:
            PUBLIC_SSH_KEY:
              description: SSH key (public part) to authorize on this machine
              # the following line added here to set a default from entity config
              defaultValue: $brooklyn:config("authorized_keys")
          steps:
            - ssh echo ${PUBLIC_SSH_KEY} >> ~/.ssh/authorized_keys
    # the following lines added here to auto-invoke on entity discovery from TF
    member.invoke:
      - authorizeSshKey

We can now set an authorized_keys config key in our blueprint at deploy time, and it will be installed on the bastion server. We can update this at any time via the API, and re-run authorizeSshKey to apply it, and have confidence the updated value will always be applied in the future.

To make it more usable and modifiable (“reconfigurable”) in the UI at the deployment stage and then later during management, we will also declare authorized_keys as a parameter in our blueprint, with the last six lines below:

    brooklyn.parameters:
      - name: demo_name
        # ... (omitted for brevity)
      - name: bucket_name
        # ... (omitted for brevity)
      # new parameter
      - name: authorized_keys
        description: >
          This optional parameter allows additional authorized_keys to be specified and
          installed when bastion servers come up or whenever the `authorizeSshKey` effector is invoked.
        pinned: true  # this makes the parameter shown by default
        reconfigurable: true


Redeploy

To deploy, as before, unmanage the previous deployment, copy the latest blueprint to the composer, set the bucket_name and demo_name parameters as before and add your public key to the authorized_keys parameter, and deploy.

When deployed, you should be able to SSH in with your default key, ssh -o HostKeyAlgorithms=ecdsa-sha2-nistp256 ec2-user@<IP_ADDRESS>, and even if you stop and restart a new bastion server, the same should apply with the new <IP_ADDRESS>.

In the AMP UI, on the “aws_instance.efs_bastion” entity, you can see the authorized key installation in Inspector -> Activities. This inspectability is one major benefit over the cloud-init and provisioner approaches. You can also replay the effector if there are issues, or if the configuration changes; for example expand the “Config” section on the “Summary” tab for the root “EFS with Bastion Server C3” entity, and you will see that the authorized_keys parameter is editable. If you entered it wrongly, you can correct it; or if you want to change it or add a new one permanently, you can do it here.

What have we learned so far?

There are several new concepts brought to bear in the last blueprint here, some quite advanced, but now you know most of what is needed to define sensors and effectors, on-box or off-box, and to use this effectively with config. Once this is defined and captured, the blueprint can then be shared, installed to the AMP Catalog, and re-used elsewhere.

The next exercise will use sensors and effectors as the basis for several “policies”, for drift-detection, schedules, size warnings, and security scan compliance, and will conclude by adding it to the catalog for use as a building block in the Exercise 2 series.